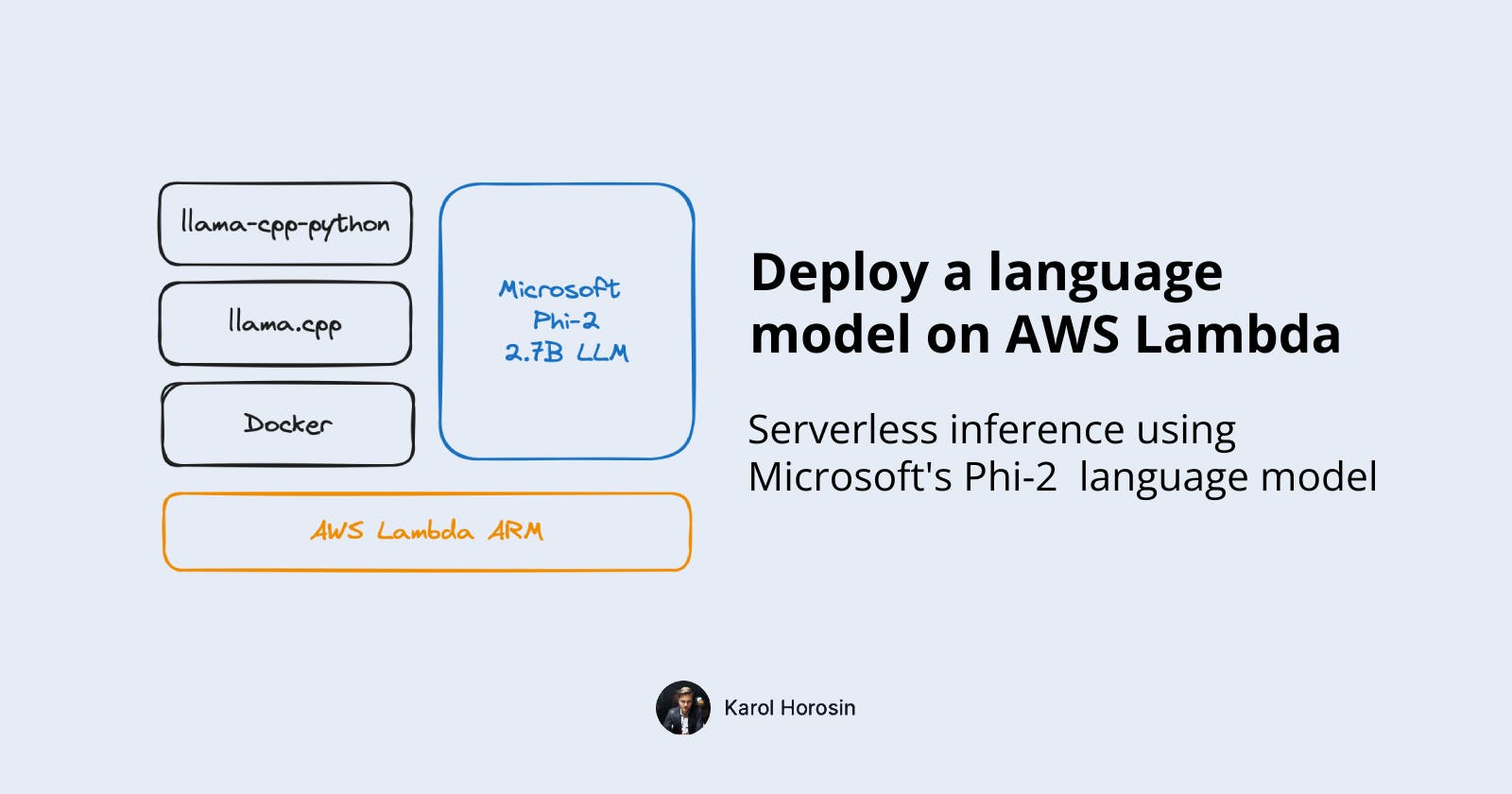

Deploy a language model (LLM) on AWS Lambda

Serverless language model inference using Python, Docker, Lambda and the Phi-2 model. A practical guide.

If you just want to see the code, see GitHub.

What are we going to build and why?

LLMs are a hot new piece of technology that everyone is experimenting with. Managed services like OpenAI are the cheapest and most convenient way to use them. You get access to their LLMs for a small fee and, in some cases, for permission to train new versions on your data.

Some applications require running an LLM yourself. You may want to process sensitive data (medical records or legal documents) or get high-quality output in a language other than English. Sometimes you have a specialised task that doesn’t require the big, expensive models from OpenAI.

Open-source LLMs are matching the quality of models from big players like OpenAI, but they still require a lot of expensive compute resources. There are smaller models available that can run on weaker hardware and perform well enough.

As an experiment, let’s deploy a smaller open-source LLM on AWS Lambda. Our goal is to learn more about LLMs and Docker-based Lambdas. We will also evaluate performance and cost to determine if any real-world applications are feasible.

For this project, we are going to use Microsoft’s Phi-2 model, a 2.7 billion parameter LLM that matches the output quality of open-source models with 13B parameters or more. It was trained on a large dataset and is a viable model for many applications. In my experience, it hallucinates a lot but otherwise provides useful outputs. Its size is perfect for the AWS Lambda environment.

We will download the model from Hugging Face and run it via the llama-cpp-python package (bindings to the popular llama.cpp, a heavily optimised CPU runtime for models). We will use a smaller, quantised version, but even the full one should fit in Lambda memory.

We will create an HTTP REST endpoint via the Lambda function URL mechanism that, for a given call:

PROMPT="Create five questions for a job interview for a senior python software engineer position."
curl $LAMBDA_URL -d "{ \"prompt\": \"$PROMPT\" }" \
  | jq -r '.choices[0].text, .usage'

(the jq command is used to decode the returned JSON)

provides the LLM output alongside execution details:

Instruct: Create five questions for a job interview for a senior python software engineer position.

Output: Questions:

1. What experience do you have in developing web applications?

2. What is your familiarity with different Python programming languages?

3. How would you approach debugging a complex Python program?

4. Can you explain how object-oriented programming principles can be applied to software development?

5. In a recent project, you were responsible for managing the codebase of a team of developers. Can you discuss your experience with this process?

{
  "prompt_tokens": 21,
  "completion_tokens": 95,
  "total_tokens": 116
}

For this tutorial, you need to know the basics of programming, Docker, AWS and Python.

I am using an M-series Mac, so by default we’re going to target ARM-based AWS Lambda, as it simply costs less for more performance. Instructions for deploying an x86 version are included.

Environment setup (AWS, Docker and Python)

I won’t dive into the basics here. If you don’t have the tools installed, please follow these resources.

You need an AWS account and the AWS CLI installed and configured.

A good tutorial from a Google search: tutorial.

Official docs, including if you’re using SSO: AWS official tutorial.

You also need Docker: Docker docs.

And an IDE; I am using Visual Studio Code.

Set up a Lambda function with Docker locally

Let’s start by getting a local environment running. If you need more information than I have included here, check out the official AWS documentation.

We will store every file in a single project directory, without subfolders.

First we will need a basic Python lambda function handler. In your project folder, create a file called lambda_function.py.

import sys

def handler(event, context):
    return "Hello from AWS Lambda using Python" + sys.version + "!"

We will also create a requirements.txt file in which we specify our dependencies. Let’s start with boto3, the AWS library for interacting with their services, just as an example.

boto3

Then, we need to specify the composition of our Docker image in a file called Dockerfile. Comments document what each line does.

FROM public.ecr.aws/lambda/python:3.12

# Copy requirements.txt
COPY requirements.txt ${LAMBDA_TASK_ROOT}

# Install the specified packages
RUN pip install -r requirements.txt

# Copy function code
COPY lambda_function.py ${LAMBDA_TASK_ROOT}

# Set the CMD to your handler
CMD [ "lambda_function.handler" ]

Finally, we will create a docker-compose.yml file to make our life easier when running and building the container.

version: '3'
services:
  llm-lambda:
    image: llm-lambda
    build: .
    ports:
      - 9000:8080

OK, done! Make sure your docker engine is running and type in the terminal:

docker-compose up

The container should build and start. Our Lambda is available under the long URL below (a quirk of the official Amazon image).

To test if the lambda is running, open another terminal window / tab and type in:

curl "http://localhost:9000/2015-03-31/functions/function/invocations" -d '{}'

Now you can go back to the tab you ran docker-compose in and press Ctrl-C to stop the container.

You can put this test code in a bash script, as seen in the project repo. Also, it will be useful to create a .gitignore file, if you’re going to version control this project.

Run an LLM inside a container

Now that we have a working lambda, let’s add some AI magic.

To run an LLM, we need to add llama-cpp-python to our requirements.txt.

boto3

llama-cpp-python

To build it, we need to introduce a Docker build stage, because the default Amazon Docker image doesn’t include the build tools required for llama-cpp. We run pip install with the CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" flags in order to use multi-threaded optimisations.

The code below also downloads the model. A community hero, TheBloke, shares compressed versions of models (quantised: less computationally intensive but lower quality), ready to download from Hugging Face. To make use of them, we can install the Hugging Face CLI and run the appropriate command. If you want to deploy a different model, you can switch the repository (TheBloke/phi-2-GGUF) and the model file (phi-2.Q4_K_M.gguf) to whatever you like.

RUN pip install huggingface-hub && \
    mkdir model && \
    huggingface-cli download TheBloke/phi-2-GGUF phi-2.Q4_K_M.gguf --local-dir ./model --local-dir-use-symlinks False

The complete Dockerfile:

# Stage 1: Build environment using a Python base image
FROM python:3.12 AS builder

# Install build tools
RUN apt-get update && apt-get install -y gcc g++ cmake zip

# Copy requirements.txt and install packages with appropriate CMAKE_ARGS
COPY requirements.txt .

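# Note: an environment variable prefix applies only to the command it precedes,
# so CMAKE_ARGS here is set for the pip upgrade, not for the requirements install.
# Move it in front of the second pip command to pass the flags to the llama-cpp-python build.
RUN CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install --upgrade pip && pip install -r requirements.txt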

# Stage 2: Final image using AWS Lambda Python image
FROM public.ecr.aws/lambda/python:3.12

# Install huggingface-cli and download the model
RUN pip install huggingface-hub && \
    mkdir model && \
    huggingface-cli download TheBloke/phi-2-GGUF phi-2.Q4_K_M.gguf --local-dir ./model --local-dir-use-symlinks False

# Copy installed packages from builder stage
COPY --from=builder /usr/local/lib/python3.12/site-packages/ /var/lang/lib/python3.12/site-packages/

# Copy lambda function code
COPY lambda_function.py ${LAMBDA_TASK_ROOT}

CMD [ "lambda_function.handler" ]

Let’s modify our lambda code to run LLM inference. Some more work is required to get the prompt out of the request body in the production environment.

import base64
import json
from llama_cpp import Llama

# Load the LLM, outside the handler so it persists between runs
llm = Llama(
    model_path="./model/phi-2.Q4_K_M.gguf", # change if different model
    n_ctx=2048, # context length
    n_threads=6, # maximum in AWS Lambda
)

def handler(event, context):
    print("Event is:", event)
    print("Context is:", context)

    # Locally the body is not encoded, via lambda URL it is
    try:
        if event.get('isBase64Encoded', False):
            body = base64.b64decode(event['body']).decode('utf-8')
        else:
            body = event['body']

        body_json = json.loads(body)
        prompt = body_json["prompt"]
    except (KeyError, json.JSONDecodeError) as e:
        return {"statusCode": 400, "body": f"Error processing request: {str(e)}"}

    output = llm(
        f"Instruct: {prompt}\nOutput:",
        max_tokens=512,
        echo=True,
    )

    return {
        "statusCode": 200,
        "body": json.dumps(output)
    }
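The body-handling part of the handler can be exercised on its own, without loading the model. Below is a minimal sketch of that idea. The helper name `extract_prompt` is hypothetical and not part of the original project, and it catches a slightly wider set of errors than the handler above (for example a missing or non-string body). It accepts both event shapes mentioned in the code comment: a plain JSON string in `body` (local invocation) and a base64-encoded body with `isBase64Encoded` set (Lambda URL).

```python
import base64
import json


def extract_prompt(event):
    """Return the prompt string from a Lambda event, or raise ValueError."""
    try:
        body = event["body"]
        if event.get("isBase64Encoded", False):
            body = base64.b64decode(body).decode("utf-8")
        return json.loads(body)["prompt"]
    except (KeyError, TypeError, ValueError) as exc:
        # json.JSONDecodeError, binascii.Error and UnicodeDecodeError
        # are all subclasses of ValueError
        raise ValueError(f"Error processing request: {exc}") from exc


# Local invocation: the body is a plain JSON string
local_event = {"body": json.dumps({"prompt": "Generate a good name for a bakery."})}

# Lambda URL style invocation: the same body, base64-encoded
encoded = base64.b64encode(local_event["body"].encode("utf-8")).decode("ascii")
url_event = {"body": encoded, "isBase64Encoded": True}

print(extract_prompt(local_event))
print(extract_prompt(url_event))
```

Both calls print the same prompt, which is a quick way to check the decoding logic before rebuilding the container.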

OK, time to test! My Docker setup has 8GB of RAM assigned, but the model should run fine with less than 4GB.

Let’s rebuild the container and start it again. It will take more time, as the model needs to download (~1.8GB).

docker-compose up --build

This time, let’s test with a real prompt. The first run will take more time, as the model needs to load.

curl "http://localhost:9000/2015-03-31/functions/function/invocations" \
  -d '{ "body": "{ \"prompt\": \"Generate a good name for a bakery.\" }" }'

Example output:

Instruct: Generate a good name for a bakery.

Output: Sugar Rush Bakery.

{
  "prompt_tokens": 15,
  "completion_tokens": 6,
  "total_tokens": 21
}

Deploy to AWS Lambda

With everything working locally, let’s finally deploy our LLM to AWS!

To execute the deployment successfully, the following steps are required:

1. Initial Setup: Determine necessary information such as AWS region, ECR repository name, Docker platform, IAM policy file, and disable AWS CLI pager.
2. Verify AWS Configuration: Optionally confirm that the AWS CLI is correctly configured.
3. ECR (Elastic Container Registry) Repository Management:
   - Determine if the specified ECR repository already exists.
   - If absent, create a new ECR repository.
4. IAM Role Handling:
   - Check for the existence of a specific IAM role for Lambda.
   - If not found, establish the IAM role and apply the AWSLambdaBasicExecutionRole policy.
5. Docker-ECR Authentication: Securely log Docker into the ECR registry using the retrieved login credentials.
6. Docker Image Construction: Utilize Docker Compose to build the Docker image, specifying the desired platform.
7. ECR Image Tagging: Label the Docker image appropriately for ECR upload.
8. ECR Image Upload: Transfer the tagged Docker image to the ECR.
9. Acquire IAM Role ARN: Fetch the ARN linked to the specified IAM role.
10. Lambda Function Verification: Assess whether the Lambda function exists.
11. Lambda Function Configuration: Set parameters like timeout, memory allocation, and image URI for Lambda.
12. Lambda Function Deployment/Update:
   - For a new Lambda function, create it with defined settings and establish a public Function URL.
   - For an existing Lambda function, update its code using the new Docker image URI.
13. Function URL Retrieval: Obtain and display the Function URL of the Lambda function.

It is a simple but lengthy process to do manually, so I’ve created a script using the AWS CLI. Feel free to modify the variables and run it yourself. The script is quite complex; if you prefer, you can do all the steps manually in the GUI, apart from pushing the container.

If you are not doing this on an M-series Mac, be sure to change linux/arm64 to linux/amd64 and the Lambda architecture passed to --architectures from arm64 to x86_64.

#!/bin/bash

# Variables
AWS_REGION="eu-central-1"
ECR_REPO_NAME="llm-lambda"
IMAGE_TAG="latest"
LAMBDA_FUNCTION_NAME="llm-lambda"
LAMBDA_ROLE_NAME="llm-lambda-role" # Role name to create, not ARN
DOCKER_PLATFORM="linux/arm64" # Change as needed, e.g., linux/amd64
LAMBDA_ARCHITECTURE="arm64" # Must match DOCKER_PLATFORM: use x86_64 for linux/amd64
IAM_POLICY_FILE="trust-policy.json"
export AWS_PAGER="" # Disable pager for AWS CLI

# Authenticate with AWS
aws configure list # Optional, just to verify AWS CLI is configured

# Look up the account ID once and derive the registry and image URI from it
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
ECR_REGISTRY="${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com"
LAMBDA_IMAGE_URI="${ECR_REGISTRY}/${ECR_REPO_NAME}:${IMAGE_TAG}"

# Check if the ECR repository exists
if ! aws ecr describe-repositories --repository-names "$ECR_REPO_NAME" --region "$AWS_REGION" > /dev/null 2>&1; then
    echo "Repository does not exist. Creating repository: $ECR_REPO_NAME"
    # Create ECR repository
    aws ecr create-repository --repository-name "$ECR_REPO_NAME" --region "$AWS_REGION"
else
    echo "Repository $ECR_REPO_NAME already exists. Skipping creation."
fi

# Check if the Lambda IAM role exists
if ! aws iam get-role --role-name "$LAMBDA_ROLE_NAME" > /dev/null 2>&1; then
    echo "IAM role does not exist. Creating role: $LAMBDA_ROLE_NAME"
    # Create IAM role for Lambda
    aws iam create-role --role-name "$LAMBDA_ROLE_NAME" --assume-role-policy-document "file://$IAM_POLICY_FILE"
    aws iam attach-role-policy --role-name "$LAMBDA_ROLE_NAME" --policy-arn arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
else
    echo "IAM role $LAMBDA_ROLE_NAME already exists. Skipping creation."
fi

# Get login password from ECR and use it to authenticate Docker to the registry
aws ecr get-login-password --region "$AWS_REGION" | docker login --username AWS --password-stdin "$ECR_REGISTRY"

# Build the Docker image using Docker Compose for the chosen platform
# (BUILDPLATFORM is set automatically by BuildKit and does not select the target platform)
DOCKER_BUILDKIT=1 DOCKER_DEFAULT_PLATFORM="$DOCKER_PLATFORM" docker-compose build

# Tag the Docker image for ECR
docker tag llm-lambda:latest "$LAMBDA_IMAGE_URI"

# Push the Docker image to ECR
docker push "$LAMBDA_IMAGE_URI"

# Get the IAM role ARN
LAMBDA_ROLE_ARN=$(aws iam get-role --role-name "$LAMBDA_ROLE_NAME" --query 'Role.Arn' --output text)

# Check if Lambda function exists
FUNCTION_EXISTS=$(aws lambda get-function --function-name "$LAMBDA_FUNCTION_NAME" --region "$AWS_REGION" 2>&1)

# Parameters for Lambda function
LAMBDA_TIMEOUT=300 # 5 minutes in seconds
LAMBDA_MEMORY_SIZE=10240 # Maximum memory size in MB

# Deploy or update the Lambda function
if echo "$FUNCTION_EXISTS" | grep -q "ResourceNotFoundException"; then
    echo "Creating new Lambda function: $LAMBDA_FUNCTION_NAME"
    aws lambda create-function --function-name "$LAMBDA_FUNCTION_NAME" \
        --region "$AWS_REGION" \
        --role "$LAMBDA_ROLE_ARN" \
        --timeout "$LAMBDA_TIMEOUT" \
        --memory-size "$LAMBDA_MEMORY_SIZE" \
        --package-type Image \
        --architectures "$LAMBDA_ARCHITECTURE" \
        --code ImageUri="$LAMBDA_IMAGE_URI"

    aws lambda create-function-url-config --function-name "$LAMBDA_FUNCTION_NAME" \
        --auth-type "NONE" --region "$AWS_REGION"

    # Add permission to allow public access to the Function URL
    aws lambda add-permission --function-name "$LAMBDA_FUNCTION_NAME" \
        --region "$AWS_REGION" \
        --statement-id "FunctionURLAllowPublicAccess" \
        --action "lambda:InvokeFunctionUrl" \
        --principal "*" \
        --function-url-auth-type "NONE"
else
    echo "Updating existing Lambda function: $LAMBDA_FUNCTION_NAME"
    aws lambda update-function-code --function-name "$LAMBDA_FUNCTION_NAME" \
        --region "$AWS_REGION" \
        --image-uri "$LAMBDA_IMAGE_URI"
fi

# Retrieve and print the Function URL
FUNCTION_URL=$(aws lambda get-function-url-config --region "$AWS_REGION" --function-name "$LAMBDA_FUNCTION_NAME" --query 'FunctionUrl' --output text)
echo "Lambda Function URL: $FUNCTION_URL"
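The script reads a trust-policy.json file from the project directory, and its contents are not shown above. Below is a minimal sketch that writes one, assuming the standard trust relationship that lets the Lambda service assume the role. The helper name `write_trust_policy` is hypothetical; the file in the project repo may differ.

```python
import json
from pathlib import Path

# Assumed trust policy: allows the Lambda service to assume the role
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def write_trust_policy(path="trust-policy.json"):
    """Write the trust policy as JSON and return the path that was written."""
    target = Path(path)
    target.write_text(json.dumps(TRUST_POLICY, indent=2) + "\n", encoding="utf-8")
    return target
```

Calling `write_trust_policy()` once in the project directory creates the file the deploy script expects.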

Now, let’s save the script as deploy.sh, make it executable and run it.

chmod +x deploy.sh

./deploy.sh

# AWS_PROFILE=your_profile ./deploy.sh

# if you want to use a different profile than default

As a result of the script, you should see the Lambda function URL in your terminal. Let’s use it for a test.

PROMPT="Create five questions for a job interview for a senior python software engineer position."
curl $LAMBDA_URL -d "{ \"prompt\": \"$PROMPT\" }" \
  | jq -r '.choices[0].text, .usage'

Like before, the first run will take longer. If you save the test as test_remote.sh, you can run it like this:

chmod +x test_remote.sh

LAMBDA_URL=your_url ./test_remote.sh

You should receive back an output similar to the one at the top of the article.
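If you would rather call the endpoint from Python than from curl and jq, the same request can be sketched with the standard library only. The function names below (`build_request`, `summarise`, `ask`) are hypothetical, and the 300-second client timeout is an assumption chosen to match the Lambda timeout used in the deploy script.

```python
import json
import urllib.request


def build_request(url, prompt):
    """Build a POST request carrying the prompt as a JSON body."""
    data = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarise(response_json):
    """Pick the generated text and the token usage out of the completion JSON."""
    return response_json["choices"][0]["text"], response_json["usage"]


def ask(url, prompt, timeout=300):
    """Send the prompt to the function URL and return (text, usage)."""
    with urllib.request.urlopen(build_request(url, prompt), timeout=timeout) as resp:
        return summarise(json.loads(resp.read().decode("utf-8")))
```

A call such as `text, usage = ask(os.environ["LAMBDA_URL"], "Generate a good name for a bakery.")` then returns the same two fields that the jq filter prints.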

If you want to see the full project code, see GitHub.

Performance

Cost and speed

You may have noticed that I chose to run the model under the configuration:

6 core multi-threading

10GB of RAM

5 minute timeout

How was the performance and how much RAM was used?

Lambda never reserves compute for you and sometimes loads your program “cold”. When that happens, anything you need to load (like a model) has to be loaded during the request. We call this a cold start.

For the prompt about five interview questions, the execution time after a cold start was 17 seconds, which is not bad at all.

After that, it consistently took 9 seconds to generate an output, tested in 4 subsequent runs. The average output length was 83 tokens. So, we achieved ~9.2 tokens/second when running hot.

As a comparison, community-reported speeds for OpenAI models are: GPT-4 10t/s, GPT-4-turbo 48t/s, GPT-3.5-turbo 50-100t/s. So we’re matching a decent, but not industry-leading, speed with little effort.

You could run this prompt 4444 times within the free tier. Above that, it would cost $1.20 per 1000 runs. The same 1000 runs with GPT-3.5 turbo would cost $0.20. This makes the endeavour not really cost-effective, so low cost shouldn’t be your aim if you’re going to implement this in production.
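The throughput, free-tier and cost figures above can be reproduced with a short calculation. The price per GB-second and the free-tier allowance below are assumptions (the arm64 on-demand rate and the monthly free tier as I understand them), and the small per-request charge is ignored; check the AWS Lambda pricing page for your region before relying on them.

```python
# Assumed values, not taken from the article: arm64 price per GB-second
# and the monthly free-tier allowance in GB-seconds.
PRICE_PER_GB_SECOND = 0.0000133334
FREE_TIER_GB_SECONDS = 400_000


def gb_seconds(memory_mb, seconds):
    """Billed compute for one run, in GB-seconds."""
    return memory_mb / 1024 * seconds


def cost_per_1000_runs(memory_mb, seconds):
    """Compute cost in dollars for 1000 runs of the given duration."""
    return gb_seconds(memory_mb, seconds) * PRICE_PER_GB_SECOND * 1000


def free_tier_runs(memory_mb, seconds):
    """How many runs fit into the free-tier allowance."""
    return int(FREE_TIER_GB_SECONDS // gb_seconds(memory_mb, seconds))


# 10GB of RAM, 9 seconds per warm run, 83 output tokens on average
print(f"{83 / 9:.1f} tokens/second")                          # 9.2 tokens/second
print(f"${cost_per_1000_runs(10240, 9):.2f} per 1000 runs")   # $1.20 per 1000 runs
print(f"{free_tier_runs(10240, 9)} free-tier runs")           # 4444 free-tier runs
```

Changing the memory size or duration in the calls shows how quickly a slower configuration eats into any saving from a smaller memory allocation.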

Optimisation

Could we run the model cheaper? The model consistently uses under 2600MB of memory. We could lower the allocated resources, with the caveat that a 6-core CPU is assigned only to Lambdas above ~8846MB.

I ran the first test at 3500MB (3 CPU cores / threads) to see how it compares. I didn’t change the code to run only 3 threads, which degraded performance. In this case, the Lambda always timed out (it ran for over 5 minutes, so the request was cancelled).

After changing the number of threads to the proper 3, the execution almost timed out at cold start, returning results after 4 minutes and 33 seconds. The 3 subsequent runs took 26-40 seconds, averaging 3.15 tokens/second. 1000 paid runs would cost $1.45. So we got slower and paid more: not a good optimisation! With this in mind, it makes sense to pay for more memory, because AWS assigns CPU resources proportionally to the reserved RAM amount.
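One way to avoid the thread mismatch described above is to derive the thread count from the environment instead of hard-coding it. The sketch below assumes that `os.cpu_count()` inside the Lambda reflects the cores assigned for the chosen memory size. The helper name `pick_n_threads` is hypothetical, and the cap of 6 mirrors the maximum used earlier.

```python
import os


def pick_n_threads(max_threads=6):
    """Use as many threads as the environment reports CPUs, capped at max_threads."""
    available = os.cpu_count() or 1  # cpu_count() can return None
    return max(1, min(available, max_threads))


n_threads = pick_n_threads()
print(f"n_threads={n_threads}")
# Hypothetical use in lambda_function.py:
# llm = Llama(model_path="./model/phi-2.Q4_K_M.gguf", n_ctx=2048, n_threads=n_threads)
```

With this in place, changing the memory setting no longer requires editing the code to keep the thread count in step.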

Next steps

As you can see, running an open-source language model is possible even in a regular environment like AWS Lambda. The cost and quality of output do not match industry-leading services, but it can serve as a use-case-specific solution. If anything, this was a great opportunity to learn about basic LLM ecosystem libraries and tools, as well as to strengthen your AWS knowledge.

Some possible next steps, if you want to keep exploring and bringing this project closer to production-ready:

Try to run a different (bigger) model or a less compressed version of Microsoft Phi-2.

Create a CDK config to automatically deploy the model and create resources.

Create a GitHub Action to validate the project and run ./deploy.sh.

Try a different serverless platform to run the model.

Subscribe to my newsletter and follow me on social media.

I may write about some of these topics. Let me know in the comments if you’re interested in any of them! If you bump into any issues, let me know as well.

What do you think about this way of deploying LLMs?

More from me:

x/twitter: horosin_